Pre-testing Advertising: Is it a Little Silly?

Opinion

This is an article I wrote recently for B&T magazine – kind of antagonistic by proxy.

I was recently asked by ABC radio to name the four big unanswered questions in our field.

One of the questions I posed was how to know whether a campaign or idea is going to work. I was excited about this concept. But then I came across an article by Millward Brown exclaiming they had the answer. I immediately grew despondent.

However, buried in the comments on this article was a forthright reply by James Hurman, MD at Y&R New Zealand: “How was this little sample of 1,795 cases chosen from Link’s database of 45,000 case studies? And why is it only consumer packaged goods? And finally – independent research in the US, UK and Germany all shows that 70% of TV ads create a short term sales effect – so although a positive Link score might give you a fraction better chance of achieving this already highly likely outcome, what are you sacrificing in terms of efficiency of spend (because with a rational piece of marketing messaging you’ll have to spend many times as much to stand out) or long term brand building (which as MB themselves point out, is compromised by short-term sales driving tactics and ‘persuasion scores’)? There have been three independent studies of pre-testing research since it was invented in the 1950’s and they all show a negative correlation. Would MB consider an independent study of their full Link database?”

In a presentation, Hurman also suggests that pre-testing advertising has no predictive validity, quoting the IPA as saying “beware of pre-testing”. The IPA says “ads which get favourable pre-test results, actually do worse than ads that didn’t”.

I’m not writing this to have a go at Millward Brown or any other pre-testing research company. It’s a good thing there are people attempting to understand what makes advertising work.

What I guess I’m partially excited about is that we are beginning to lift the rug on why things like pre-testing don’t work.

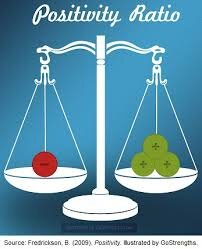

Through behavioural economics, we are getting better at realising that what people say and what they do are very different things. What people say they like and what they actually like are very different things too. They (we) are incapable of understanding what we like and don’t like, or what will have an impact on us.

If you need sobering up, look up ‘cognitive bias’. We all have cognitive biases (thinking errors). It’s these errors that make trying to work out via pre-testing whether an ad will work potentially no better than making an educated guess.